Summary:

- NVIDIA stock soared 14%, primarily because of strong Q4 results and guidance.

- NVIDIA’s GPU dominance in Artificial Intelligence (“AI”) has positioned the company in the realm of Generative AI, supplying chips for the much-ballyhooed ChatGPT.

- TSMC makes NVIDIA’s GPUs for ChatGPT as well as AI accelerators for several other companies that are launching their own chatbot services.

Tom Merton

NVIDIA (NASDAQ:NVDA) reported revenue for the fourth quarter ended January 29, 2023, of $6.05 billion, down 21% from a year ago and up 2% from the previous quarter. GAAP earnings per diluted share for the quarter were $0.57, down 52% from a year ago and up 111% from the previous quarter.
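As a quick sanity check on these percentages, the short Python sketch below backs out the implied prior-period figures from the reported quarter. It assumes the quoted percentage changes are exact; because they are rounded, the results are approximations rather than NVIDIA’s reported prior-period numbers. The helper name `implied_prior` is hypothetical.

```python
def implied_prior(current: float, pct_change: float) -> float:
    """Back out a prior-period value from the current value and a fractional change.

    pct_change is a fraction: -0.21 means "down 21%", 1.11 means "up 111%".
    """
    return current / (1 + pct_change)


REVENUE_BN = 6.05  # Q4 FY2023 revenue, $ billions (quoted above)
GAAP_EPS = 0.57    # Q4 FY2023 GAAP diluted EPS, $ (quoted above)

# Rounded percentages mean these are approximations, not reported figures.
revenue_year_ago = implied_prior(REVENUE_BN, -0.21)  # roughly $7.66B
revenue_prev_qtr = implied_prior(REVENUE_BN, 0.02)   # roughly $5.93B
eps_year_ago = implied_prior(GAAP_EPS, -0.52)        # roughly $1.19
eps_prev_qtr = implied_prior(GAAP_EPS, 1.11)         # roughly $0.27

print(f"Implied year-ago revenue:        ${revenue_year_ago:.2f}B")
print(f"Implied previous-quarter revenue: ${revenue_prev_qtr:.2f}B")
print(f"Implied year-ago EPS:            ${eps_year_ago:.2f}")
print(f"Implied previous-quarter EPS:    ${eps_prev_qtr:.2f}")
```

The exercise shows how steep the YoY declines were: revenue sits roughly $1.6 billion below the implied year-ago level, and EPS is less than half of it.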

While YoY revenue and earnings growth was lackluster, to say the least, QoQ growth was positive, indicating momentum in the company’s top and bottom lines. Importantly, guidance was even better: revenue for the first quarter of fiscal 2024 is expected to be $6.50 billion, plus or minus 2%, vs. $6.32 billion consensus and $6.05 billion for the recent quarter. As a result, NVDA shares surged 14% at the close.
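The guidance arithmetic can be checked the same way. This minimal sketch uses only the figures quoted in the paragraph above; the function name `guidance_range` is illustrative. It computes the ±2% band around the $6.50 billion midpoint and compares the midpoint with consensus and with the recent quarter.

```python
def guidance_range(midpoint_bn: float, tolerance: float) -> tuple[float, float]:
    """Return the (low, high) band for a midpoint given as 'plus or minus tolerance'."""
    return midpoint_bn * (1 - tolerance), midpoint_bn * (1 + tolerance)


GUIDANCE_MIDPOINT_BN = 6.50  # Q1 FY2024 revenue outlook, $ billions
CONSENSUS_BN = 6.32          # consensus estimate quoted above
RECENT_QUARTER_BN = 6.05     # Q4 FY2023 reported revenue

low, high = guidance_range(GUIDANCE_MIDPOINT_BN, 0.02)            # roughly 6.37 to 6.63
beat_vs_consensus = GUIDANCE_MIDPOINT_BN / CONSENSUS_BN - 1        # roughly +2.8%
sequential_growth = GUIDANCE_MIDPOINT_BN / RECENT_QUARTER_BN - 1   # roughly +7.4%

print(f"Guidance range: ${low:.2f}B to ${high:.2f}B")
print(f"Midpoint vs. consensus: {beat_vs_consensus:+.1%}")
print(f"Midpoint vs. recent quarter: {sequential_growth:+.1%}")
print(f"Low end above consensus: {low > CONSENSUS_BN}")
```

Even the low end of the range (about $6.37 billion) sits above the $6.32 billion consensus.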

While the data center business remains strong for NVDA, with a GPU market share of 95%, the company’s dominance in Artificial Intelligence (“AI”) has positioned it in the realm of Generative AI. I detailed NVDA’s AI business extensively in a September 6, 2022 Seeking Alpha article entitled “Nvidia: Biden Targeting China On The AI Front.”

By definition:

“Generative AI refers to a category of artificial intelligence (AI) algorithms that generate new outputs based on the data they have been trained on. Unlike traditional AI systems that are designed to recognize patterns and make predictions, generative AI creates new content in the form of images, text, audio, and more.”

But the key to NVDA’s position in Generative AI is supplying its GPU chips to San Francisco-based startup OpenAI. OpenAI was co-founded in 2015 by Elon Musk and Sam Altman, among others, and in November 2022 unveiled ChatGPT. The move brought AI from an “egghead” concept to a technology that everyday people could use. So popular was the concept that ChatGPT signed up 1 million users in the five days after its release, according to a Dec. 5 tweet from Altman.

According to OpenAI, ChatGPT is “an AI-powered chatbot developed by OpenAI, based on the GPT (Generative Pretrained Transformer) language model. It uses deep learning techniques to generate human-like responses to text inputs in a conversational manner.”

Microsoft (MSFT) is OpenAI’s biggest investor, with a recent $10 billion investment, and has integrated ChatGPT into its Bing search engine and Edge browser, further intensifying competition with Google (GOOG) (GOOGL). Alphabet’s Google recently unveiled Bard to compete with ChatGPT.

ChatGPT’s popularity with the general public grew as it took college campuses by storm, with students using it to write term papers. It is one of several examples of generative AI: tools that let users enter written prompts and receive new, human-like text, images, or videos generated by the AI.

ChatGPT is a chatbot, which by definition is a computer program designed to simulate conversation with human users, especially on the internet. It’s not a new concept. The first chatbot, ELIZA, was written in 1966 by German-American computer scientist Joseph Weizenbaum at the MIT Artificial Intelligence Laboratory.

NVIDIA’s Opportunity with ChatGPT

ChatGPT uses NVIDIA’s A100 GPU, built on the Ampere GA100 core. The A100 HPC accelerator is a $12,500 tensor core GPU that features high performance and HBM2E memory (80 GB of it) capable of delivering up to 2 TB/s of memory bandwidth, enough to run very large models and datasets. The A100 is commonly found in packs of eight, which is what Nvidia included in the DGX A100, its own universal AI system, which had a sticker price of $199,000.

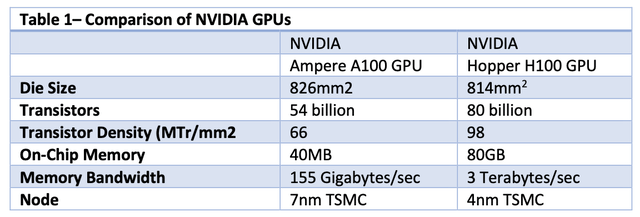

Now, ChatGPT is already counting on the Nvidia H100, based on the Hopper GH100 core. Nvidia says the H100 can train AI models up to 9x faster than an A100. The price of an H100 is approximately 1.75x that of an A100. Table 1 compares the performance of the A100 and H100.

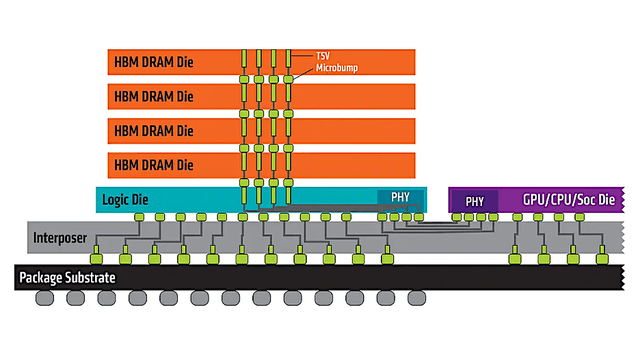
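To put the H100’s pricing in perspective, the sketch below combines the figures quoted above: a $12,500 A100, an H100 priced at roughly 1.75x that, and Nvidia’s “up to 9x” training speedup. It is a rough, hypothetical performance-per-dollar comparison that treats the 9x claim as a best case; real workloads will vary, and none of the names below come from NVIDIA.

```python
A100_PRICE_USD = 12_500          # A100 price quoted above
H100_PRICE_MULTIPLE = 1.75       # H100 costs roughly 1.75x an A100
H100_MAX_TRAINING_SPEEDUP = 9.0  # Nvidia's "up to 9x" claim (best case)


def perf_per_dollar_ratio(speedup: float, price_multiple: float) -> float:
    """Training throughput per dollar of an H100 relative to an A100."""
    return speedup / price_multiple


h100_price_usd = A100_PRICE_USD * H100_PRICE_MULTIPLE  # 21,875

print(f"Implied H100 price: ${h100_price_usd:,.0f}")
# A speedup equal to the price multiple (1.75x) is the break-even point.
for speedup in (1.75, 3.0, H100_MAX_TRAINING_SPEEDUP):
    ratio = perf_per_dollar_ratio(speedup, H100_PRICE_MULTIPLE)
    print(f"{speedup:>4}x faster -> {ratio:.2f}x performance per dollar")
```

Any real-world speedup above 1.75x leaves the H100 ahead on a per-dollar basis; at the full 9x it would be roughly 5x.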

Chart 1 is an illustration of HBM2 memory. The second generation of High Bandwidth Memory, HBM2, specifies up to eight dies per stack and doubles the pin transfer rate of first-generation HBM to up to 2 GT/s. Retaining 1024-bit-wide access, HBM2 can reach 256 GB/s of memory bandwidth per package. The HBM2 spec allows up to 8 GB per package.

Up to eight DRAM dies are stacked and connected to the memory controller on a GPU or CPU through a substrate, such as a silicon interposer. Within the stack, the dies are vertically interconnected by through-silicon vias (“TSVs”) and microbumps.

On January 19, 2016, Samsung Electronics (OTCPK:SSNLF) announced early mass production of HBM2, at up to 8 GB per stack. SK Hynix (OTC:HXSCL) also announced availability of 4 GB stacks in August 2016.

Chart 1
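The 256 GB/s HBM2 figure follows directly from the interface width and the per-pin transfer rate. Here is a minimal sketch of that calculation (the helper name is hypothetical), assuming decimal units (1 GB = 10^9 bytes) and peak rather than sustained bandwidth.

```python
BITS_PER_BYTE = 8


def peak_bandwidth_gb_s(bus_width_bits: int, transfer_rate_gt_s: float) -> float:
    """Peak bandwidth per stack in GB/s: bits moved per transfer x transfers per second."""
    return bus_width_bits * transfer_rate_gt_s / BITS_PER_BYTE


# HBM2: 1024-bit interface at up to 2 GT/s per pin.
hbm2_bandwidth = peak_bandwidth_gb_s(1024, 2.0)

# HBM2 allows up to 8 GB in a stack of up to eight dies, i.e. 1 GB (8 Gb) per die
# when all eight dies are populated.
hbm2_stack_capacity_gb = 8
hbm2_dies_per_stack = 8
capacity_per_die_gb = hbm2_stack_capacity_gb / hbm2_dies_per_stack

print(f"HBM2 peak bandwidth per stack: {hbm2_bandwidth:.0f} GB/s")
print(f"Capacity per die in a full 8-die, 8 GB stack: {capacity_per_die_gb:.0f} GB")
```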

Historically, the first HBM chip was produced by SK Hynix in 2013, and the first devices to use HBM were AMD’s (AMD) Fiji GPUs in 2015. Having been first, SK Hynix remains the industry leader. It has released first-generation HBM, second-generation HBM (HBM2), third-generation HBM (HBM2E), and fourth-generation HBM (HBM3), and has secured a market share of 60-70%.

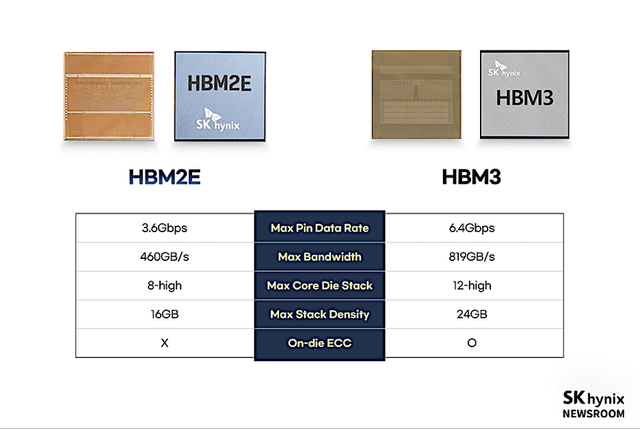

HBM2 debuted in 2016, and the spec was updated in early 2020 under the name “HBM2E”. Chart 2 compares the properties of HBM2E and HBM3; the latter was introduced in January 2022. HBM3 offers several enhancements over HBM2E, most notably a near-doubling of the per-pin data rate, from 3.6 Gbps for HBM2E to 6.4 Gbps for HBM3, or 819 GB/s of bandwidth per device.

Chart 2
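Peak per-stack bandwidth is the interface width times the per-pin data rate, divided by eight bits per byte. The sketch below applies that to the 3.6 Gbps and 6.4 Gbps rates quoted above, assuming both generations use a 1024-bit interface per stack; it computes peak figures only, and the names are illustrative.

```python
BUS_WIDTH_BITS = 1024  # assumed per-stack interface width for both HBM2E and HBM3


def peak_bandwidth_gb_s(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s from the per-pin data rate in Gbps."""
    return pin_rate_gbps * bus_width_bits / 8


hbm2e = peak_bandwidth_gb_s(3.6)  # about 460.8 GB/s
hbm3 = peak_bandwidth_gb_s(6.4)   # about 819.2 GB/s, the "819 GB/s" figure
speedup = hbm3 / hbm2e            # about 1.78x

print(f"HBM2E: {hbm2e:.1f} GB/s, HBM3: {hbm3:.1f} GB/s, ratio {speedup:.2f}x")
```

A ratio of about 1.78x is close to, but short of, a full doubling.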

With these improvements, HBM3 first appeared in NVIDIA’s “Hopper” H100 enterprise GPU, and then in products from Intel (INTC) and AMD.

HBM Production Process

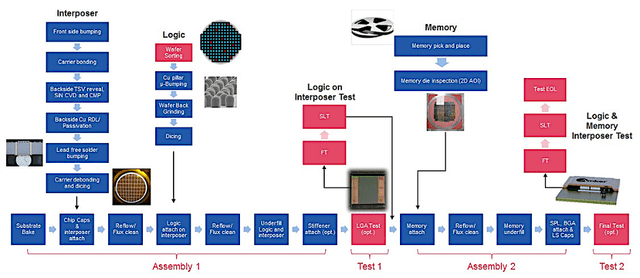

Producing an HBM-based package involves assembling components from several different manufacturers. The logic (GPU or CPU) and the DRAM memory are supplied in wafer form, along with the interposer and any additional components, to an outsourced semiconductor assembly and test (OSAT) provider. The OSAT provider bonds the components to the interposer and then bonds that assembly to the package substrate.

Chart 3

TSMC’s Opportunity with Chatbots

The key point in this analysis is that ChatGPT runs on NVIDIA’s A100 and H100 processors, and that Taiwan Semiconductor (TSMC) (TSM) makes the A100 and H100 with its 7nm and 4nm processes, respectively.

While ChatGPT is the sweetheart of the generative AI market, with its chip suppliers NVIDIA and SK Hynix the greatest beneficiaries, other developments represent an opportunity for TSMC, as several other companies are moving to their own chatbot services. These include:

- In February 2023, Google began introducing an experimental service called “Bard,” which is based on its LaMDA AI program.

- China’s Baidu (NASDAQ:BIDU) is also developing an AI-powered chatbot similar to ChatGPT, called “Ernie Bot,” which is expected to launch next month.

- Microsoft said it would integrate ChatGPT access via its Azure cloud “soon,” but did not give a specific date.

- The South Korean search engine firm Naver announced in February 2023 that it would launch a ChatGPT-style service called “SearchGPT” in Korean in the first half of 2023.

- The Russian search engine firm Yandex announced in February 2023 that it would launch a ChatGPT-style service called “YaLM 2.0” in Russian before the end of 2023.

These alternatives have their own hardware options, including Google Tensor Processing Units (TPUs), AMD Instinct GPUs, AWS Graviton 4 chips, and AI accelerators from startups such as Cerebras, SambaNova, and Graphcore.

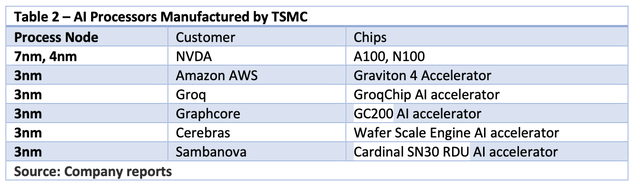

But so far, few of these new chips have taken significant market share. One exception to watch is Google, whose TPUs have gained traction in the Stable Diffusion community. Regardless of which chips win, TSMC manufactures all of the chips listed above, as shown in Table 2.

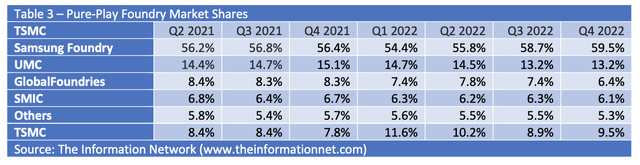

TSMC dominates the pure-play foundry market with more than a 55% share, as shown in Table 3, well ahead of Samsung (OTCPK:SSNLF) with a share under 15%. This dominance makes TSMC the clear choice for AI accelerator designers at these small nodes. Advanced technologies, defined as 7 nanometers and below, accounted for 54% of TSMC’s total wafer revenue in Q4 2022.

Investor Takeaway: NVDA and TSMC Stock Ratings

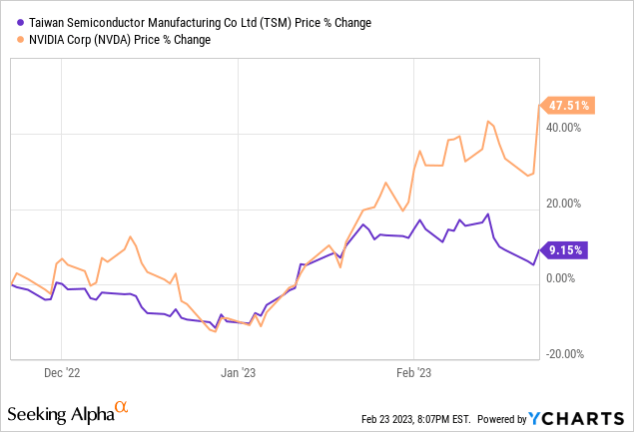

Chart 4 shows the share prices of TSMC and NVIDIA over the past year. The two stocks tracked each other until mid-January 2023, when NVIDIA’s share price began rising on positive comments from several analysts, followed by publicity around ChatGPT.

TSMC’s share price was flat as a result of weak guidance: the company forecast Q1 2023 revenue in a range of $16.7 billion to $17.5 billion, down sequentially from Q4 2022 and down as much as 5% from the $17.6 billion reported in Q1 2022. Profit margins will fall as well, with operating margin expected to be in a range of 41.5% to 43.5%, compared to 45.6% a year earlier. Geopolitical concerns over Mainland China have also been a headwind for TSMC in the past few weeks, starting with the Chinese balloon over the U.S.

YCharts

Chart 4
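For readers who want to check the “as much as 5%” figure, the sketch below compares TSMC’s Q1 2023 guidance with the Q1 2022 results quoted above. It uses only those quoted numbers; the variable names are illustrative.

```python
Q1_2022_REVENUE_BN = 17.6
Q1_2023_GUIDE_BN = (16.7, 17.5)             # low and high ends of revenue guidance
Q1_2022_OP_MARGIN_PCT = 45.6
Q1_2023_OP_MARGIN_GUIDE_PCT = (41.5, 43.5)  # low and high ends of margin guidance

# Year-over-year revenue change at each end of the guidance range.
yoy_changes = [guide / Q1_2022_REVENUE_BN - 1 for guide in Q1_2023_GUIDE_BN]

# Operating-margin compression, in percentage points, at each end of the range.
margin_drop_pts = [Q1_2022_OP_MARGIN_PCT - m for m in Q1_2023_OP_MARGIN_GUIDE_PCT]

for guide, change in zip(Q1_2023_GUIDE_BN, yoy_changes):
    print(f"${guide}B guidance -> {change:+.1%} YoY")
for margin, drop in zip(Q1_2023_OP_MARGIN_GUIDE_PCT, margin_drop_pts):
    print(f"{margin}% operating margin -> down {drop:.1f} points YoY")
```

At the low end, revenue would be down about 5.1% YoY; at the high end, by less than 1%. Operating margin would be 2.1 to 4.1 percentage points lower than a year earlier.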

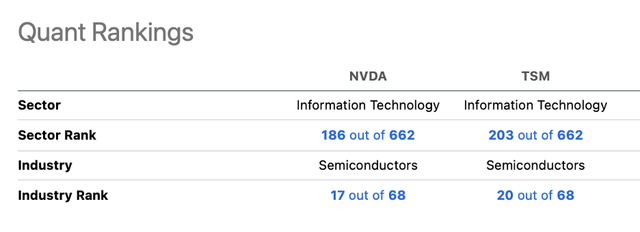

But the two companies’ fortunes are closely linked. In 2021, NVIDIA was among TSMC’s top five customers, representing 7.6% of total revenue. Chart 5 shows that the Seeking Alpha Quant Rankings were comparable for the two companies.

Chart 5

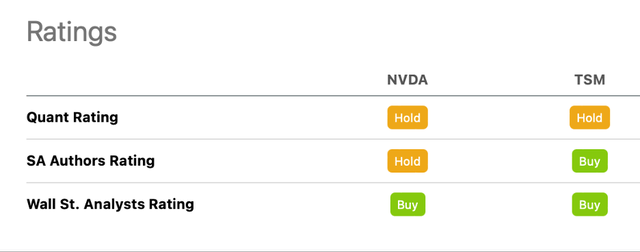

Chart 6 also shows comparable Quant Ratings and Wall Street Analyst Ratings. As for SA Author Ratings, I rate TSM a Buy, as discussed in my January 9, 2023 Seeking Alpha article entitled “TSMC: My Top Pick In 2023 As It Dominates Samsung Electronics And Intel Foundries.” I rate NVDA a Hold, based on macro-related headwinds for the company, including a sequential downturn in the data center business.

Chart 6

Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

This free article presents my analysis of the semiconductor sector. A more detailed analysis is available on my Marketplace newsletter site, Semiconductor Deep Dive.